Building a LangChain.js SQL agent for Presto

The progression of Large Language Models (LLMs) in the past few months has been inspiring to say the least. Their impact on communication platforms can be significant if reliable and simple workflows are implemented. We shipped Tailor, our AI assistant, a few months ago and have seen amazing results thus far. We have been actively iterating on LLM based tools ever since.

At the moment, we are exploring how agenic LLMs can improve Threads by automating complex tasks, providing feedback, and more. We wanted to better understand the benefits and limitations of these LLMs in a controlled production environment to help us gauge how such systems can be utilized in a scalable/reliable way to provide value to our users.

Our first experiment was to create an internal tool agent that you can ask questions to in natural language, and it queries a segment of our mysql DB using Presto (ex. Which orgs had the most posted threads in the past 7 days, list the orgIDs...etc).

This experiment allows us to think about how users are expected to interface with these agents, what it takes to run an agent in prod, and how reliable such a seemingly simple agent works.

Below is the step-by-step process of how we implemented this sql agent and what we've found to be its advantages and limitations.

Below is a simplified version of our InternalPrestoIntegration class that uses the presto-client library. In prod, we add logging and error tracking around this class.

runQuery is the most dangerous function here as it runs arbitrary SQL queries. We could do some validation to ensure that it only runs SELECT/DESCRIBE (and SHOW TABLES) queries on ALLOW_LISTED_TABLE_NAMES , but that didn't feel worth implementing right now as this is an experimental internal feature on a read-only non-private data DB.

LangChain.js setup

Unfortunately, we are using Node16 and LangChain.js is officially only supported on Node18. We will be upgrading our node version in the next few weeks, but for this experiment we had to implement temporary hacks to make LangChain.js work for us. We simply just had to call the setupLangChainForNode16() function prior to importing or using any LangChain code path (more context).

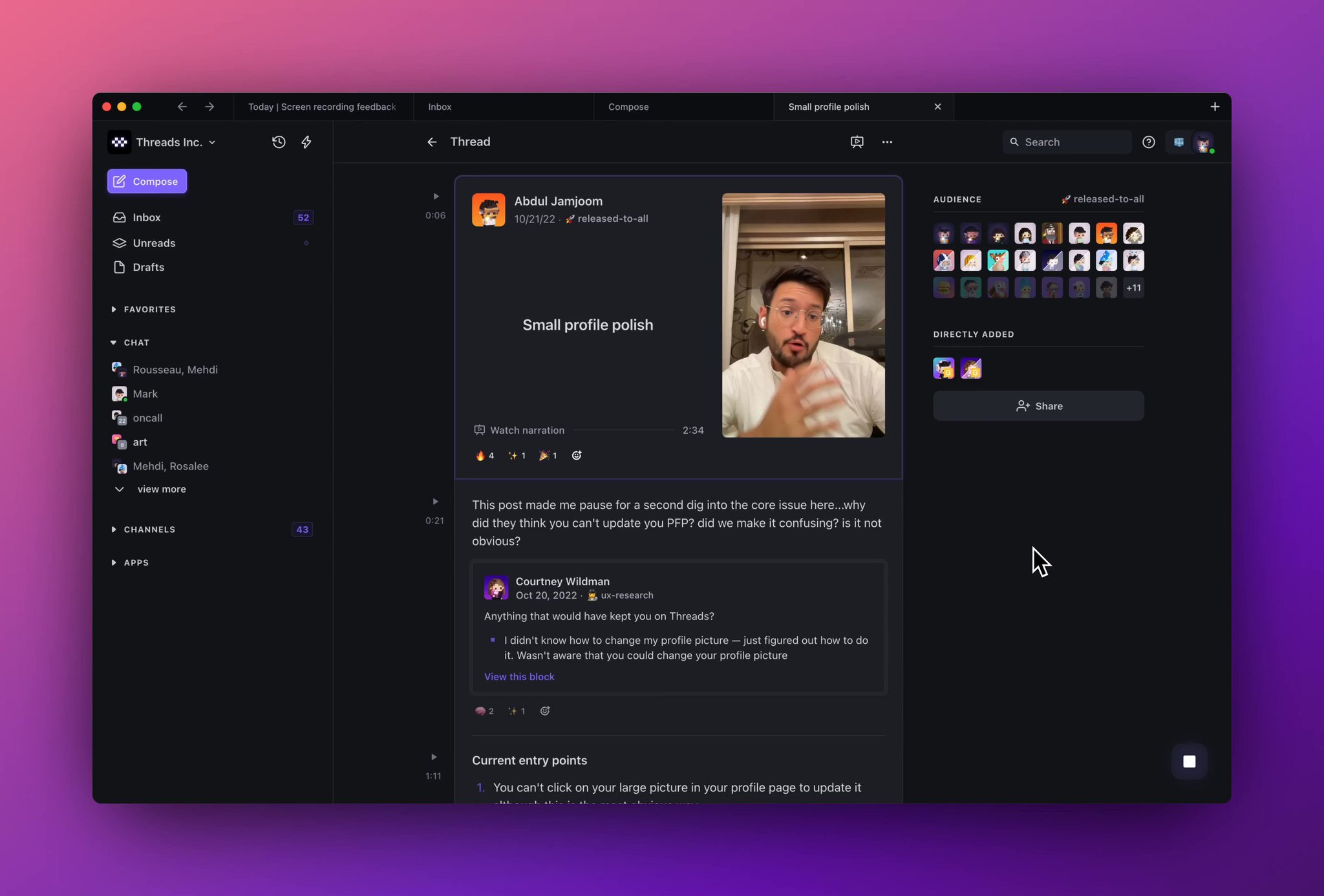

Interface setup

We have an internal tool that allows you to chat with Tailor, our AI assistant, and trigger different agents with certain flags. For example, prefixing your message with sql triggers the sql-agent code-path. We also allow you ask follow-up questions by prefixing your message with follow-up . We are aware that this is not an ideal interface as it doesn't represent the memory the agent uses well, though it's sufficient for internally testing agenic LLMs.

respond to this tweet if you are interested in building agents on Threads (q&a bots) or if you have use-cases you are excited to see.

respond to this tweet if you are interested in building agents on Threads (q&a bots) or if you have use-cases you are excited to see.

Agent setup

The agent has access to three tools

get_visible_tables→ What tables do I have access to?describe_table→ How can I query this table?run_sql_query→ How do I get the data the user wants? This tool runs theSELECTquery

Additionally, the agent has memory, and you can pass it a list of older messages that it keeps in context. This is what allows us to support follow-up questions.

The code below is the skeleton of the agent; we'll explore improvements later on. If you run this, you'll see that the agent rarely correctly runs a properly formatted SQL query. It hallucinates tables and columns and quickly gets stuck on error states.

We need the agent to follow this logical flow:

- Always start by calling

get_visible_tablesto understand what tables exist - Then decide which tables are important to query and call

describe_tableon all of them to understand how to query them - Finally, use the

run_sql_querytool to run aselectquery on a table that you have already described

We told the agent to do exactly that by updating the tool descriptions to the following:

get_visible_tables→ Call this to get a list of all the presto sql tables you can see. You should always use this tool prior to using any other tool.describe_table→ Call this to run a sql presto describe table query. You must pass in a tableName as input. Always call this prior to using the run_sql_query tool if you haven't done so already.run_sql_query→ Call this to run a valid sql presto select query. Do not use on arbitrary tables or columns; always validate what tables/columns you have access to prior to querying them by calling the get_visible_tables and describe_table tools you haven't already.

If you run the agent again, you'll see that it's much better at running valid queries and returning reasonable results.

Tool hydration

The issue now seems to be that the agent doesn't understand what data each table contains and what some of the columns mean. It doesn't always pick the correct tables to describe or misuses some of the columns. This is logical as it only has access to names/types without any description of what the tables/columns actually store. Some tables/columns are easy for the model to understand, but sometimes the names are even ambiguous to a human who isn't familiar with our stack.

Our fix was to treat the agent as a new junior engineer who has no idea what these tables/columns do. We wrote a short_description and a long_description for each table that explains ambiguous columns and generally what the table stores. Moreover, we added a blurb for any concepts/connections in the data we use (ex. blocks are the atomic unit of async content...etc).

We then updated the get_visible_tables tool such that it injects the short_description for each table — contains a general blurb of what type of data the table has. We then also updated the describe_table tool to inject the long_description to the queried table such that any ambiguous fields/connections are clarified. Doing this significantly improved the relevant table selection step for the agent (what tables to call describe_table on?), thus making the final select queries more accurate.

reflection

The sql-agent proved to be useful for helping structure complex SQL queries, especially after we've added manually written descriptions. The agent still tends to occasionally generate buggy queries and requires a bunch of follow-ups to get you what you are asking for.

Additionally, we don't do any compression right now, so after a bunch of back-and-forth, the agent fatals if the input text is too long. There are certainly some flaws in this system and we believe that agenic LLMs will require a lot more work to be reliable enough for public production features.

We plan on investing more time on agenic LLMs exploring their benefits and limitations. We will be sharing more on this soon. In the meantime, check out our blog and follow us on Twitter for more updates: